A blog by Elizabeth Denham, UK Information Commissioner

18 June 2021

Facial recognition technology brings benefits that can make aspects of our lives easier, more efficient and more secure. The technology allows us to unlock our mobile phones, set up a bank account online, or go through passport control.

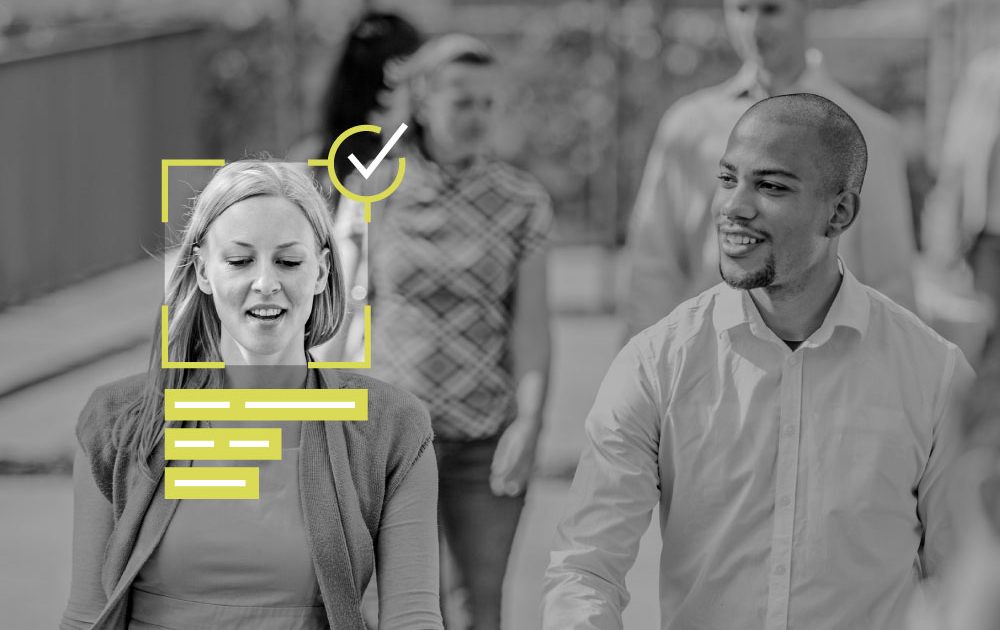

But when the technology and its algorithms are used to scan people’s faces in real time and in more public contexts, the risks to people’s privacy increase.

I am deeply concerned about the potential for live facial recognition (LFR) technology to be used inappropriately, excessively or even recklessly. When sensitive personal data is collected on a mass scale without people’s knowledge, choice or control, the impacts could be significant.

We should be able to take our children to a leisure complex, visit a shopping centre or tour a city to see the sights without having our biometric data collected and analysed with every step we take.

Unlike CCTV, LFR and its algorithms can automatically identify who you are and infer sensitive details about you. It can be used to instantly profile you to serve up personalised adverts or match your image against known shoplifters as you do your weekly grocery shop.

In future, there’s the potential to overlay CCTV cameras with LFR, and even to combine it with social media data or other “big data” systems – LFR is supercharged CCTV.

It is not my role to endorse or ban a technology but, while this technology is developing and not widely deployed, we have an opportunity to ensure it does not expand without due regard for data protection.

Therefore, today I have published a Commissioner’s Opinion on the use of LFR in public places by private companies and public organisations. It explains how data protection and people’s privacy must be at the heart of any decisions to deploy LFR. And it explains how the law sets a high bar to justify the use of LFR and its algorithms in places where we shop, socialise or gather.

The Opinion is rooted in law and informed in part by six ICO investigations into the use, testing or planned deployment of LFR systems, as well as our assessment of other proposals that organisations have sent to us. Uses we’ve seen included addressing public safety concerns and creating biometric profiles to target people with personalised advertising.

It is telling that none of the organisations involved in our completed investigations were able to fully justify the processing and, of those systems that went live, none were fully compliant with the requirements of data protection law. All of the organisations chose to stop, or not proceed with, the use of LFR.

With any new technology, building public trust and confidence in the way people’s information is used is crucial so the benefits derived from the technology can be fully realised.

In the US, people did not trust the technology. Some cities banned its use in certain contexts and some major companies have paused facial recognition services until there are clearer rules. Without trust, the benefits the technology may offer are lost.

And, if used properly, there may be benefits. LFR has the potential to do significant good – helping in an emergency search for a missing child, for example.

Today’s Opinion sets out the rules of engagement. It builds on our Opinion into the use of LFR by police forces and also sets a high threshold for its use.

Organisations will need to demonstrate high standards of governance and accountability from the outset, including being able to justify that the use of LFR is fair, necessary and proportionate in each specific context in which it is deployed. They need to demonstrate that less intrusive techniques won’t work.

These are important standards that require robust assessment.

Organisations will also need to understand and assess the risks of using a potentially intrusive technology and its impact on people’s privacy and their lives. For example, they should consider how issues around accuracy and bias could lead to misidentification, and the damage or detriment that comes with that.

My office will continue to focus on technologies that have the potential to be privacy invasive, working to support innovation while protecting the public. Where necessary we will tackle poor compliance with the law.

We will work with organisations to ensure that the use of LFR is lawful, and that a fair balance is struck between their own purposes and the interests and rights of the public. We will also engage with Government, regulators and industry, as well as international colleagues, to make sure data protection and innovation can continue to work hand in hand.

Elizabeth Denham was appointed UK Information Commissioner on 15 July 2016, having previously held the position of Information and Privacy Commissioner for British Columbia, Canada.

Notes to Editors

- The Information Commissioner’s Office (ICO) upholds information rights in the public interest, promoting openness by public bodies and data privacy for individuals.

- The ICO has specific responsibilities set out in the Data Protection Act 2018, the UK General Data Protection Regulation (GDPR), the Freedom of Information Act 2000, Environmental Information Regulations 2004 and Privacy and Electronic Communications Regulations 2003.

- Since 25 May 2018, the ICO has had the power to impose a civil monetary penalty (CMP) on a data controller of up to £17million (20m Euro) or 4% of global turnover.

- The DPA2018 and UK GDPR gave the ICO new strengthened powers.

- The data protection principles in the UK GDPR evolved from the original DPA, and set out the main responsibilities for organisations.

- To report a concern to the ICO, go to ico.org.uk/concerns.