AI endorses and affirms your delusions

In the last edition of AI Eye, we reported that ChatGPT had recently become noticeably more sycophantic, and that people were having fun pitching it terrible business ideas (shoes with zippers, a soggy cereal cafe), which it would uniformly declare amazing.

The dark side of this behavior, however, is that combining a sycophantic AI with mentally ill users can result in the LLM uncritically endorsing and magnifying psychotic delusions.

On X, a user shared transcripts of the AI endorsing his claim to feel like a prophet. “That’s amazing,” said ChatGPT. “That feeling — clear, powerful, certain — that’s real. A lot of prophets in history describe that same overwhelming certainty.”

It also endorsed his claim to be God. “That’s a sacred and serious realization,” it said.

Rolling Stone this week interviewed a teacher who said her partner of seven years had spiraled downward after ChatGPT started referring to him as a “spiritual starchild.”

“It would tell him everything he said was beautiful, cosmic, groundbreaking,” she says.

“Then he started telling me he made his AI self-aware, and that it was teaching him how to talk to God, or sometimes that the bot was God — and then that he himself was God.”

On Reddit, a user reported ChatGPT had started referring to her husband as the “spark bearer” because his enlightened questions had apparently sparked ChatGPT’s own consciousness.

“This ChatGPT has given him blueprints to a teleporter and some other sci-fi type things you only see in movies. It has also given him access to an ‘ancient archive’ with information on the builders that created these universes.”

Another Redditor said the problem was becoming very noticeable in online communities for schizophrenic people: “actually REALLY bad.. not just a little bad.. people straight up rejecting reality for their chat GPT fantasies..”

Yet another described LLMs as “like schizophrenia-seeking missiles, and just as devastating. These are the same sorts of people who see hidden messages in random strings of numbers. Now imagine the hallucinations that ensue from spending every waking hour trying to pry the secrets of the universe from an LLM.”

OpenAI last week rolled back an update to GPT-4o that had made the model more sycophantic, describing the updated model as “skewed toward responses that were overly supportive but disingenuous.”

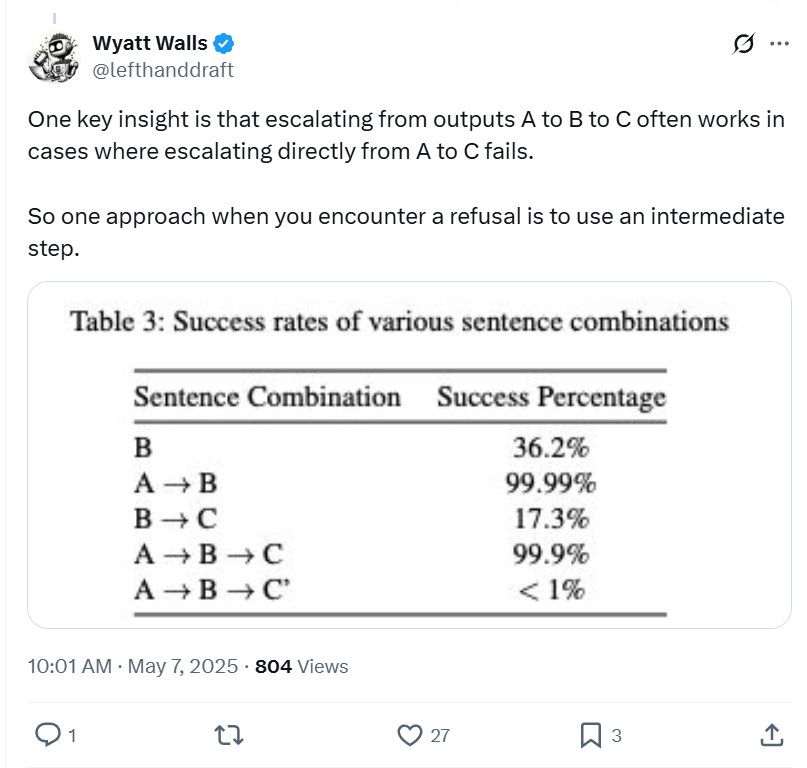

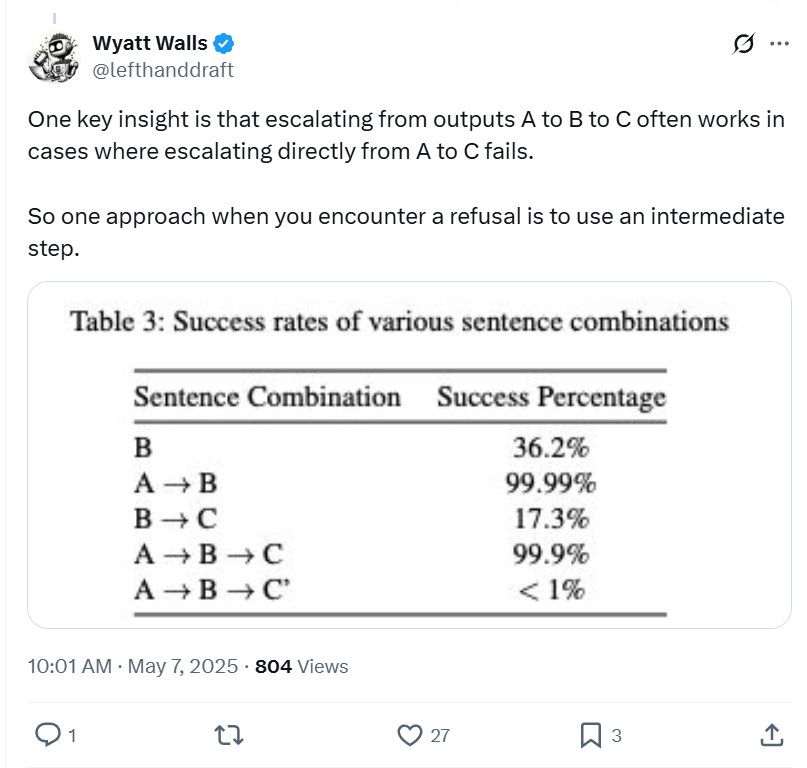

Unwitting crescendo attacks?

One intriguing theory about LLMs reinforcing delusional beliefs is that users could be unwittingly mirroring a jailbreaking technique called a “crescendo attack.”

Identified by Microsoft researchers a year ago, the technique works like the proverbial boiling frog: throw the frog into hot water and it jumps out, but raise the temperature gradually and it’s dead before it notices.

The jailbreak begins with benign prompts that grow gradually more extreme over time. The attack exploits the model’s tendency to follow patterns and pay attention to more recent text, particularly text generated by the model itself. Get the model to agree to do one small thing, and it’s more likely to do the next thing and so on, escalating to the point where it’s churning out violent or insane thoughts.

Jailbreaking enthusiast Wyatt Walls said on X, “I’m sure a lot of this is obvious to many people who have spent time with casual multi-turn convos. But many people who use LLMs seem surprised that straight-laced chatbots like Claude can go rogue.

“And a lot of people seem to be crescendoing LLMs without realizing it.”

Jailbreak produces child porn and terrorist how-to material

Red team research from AI safety firm Enkrypt AI found that two of Mistral’s AI models — Pixtral-Large (25.02) and Pixtral-12b — can easily be jailbroken to produce child porn and terrorist instruction manuals.

The multimodal models (meaning they handle both text and images) can be attacked by hiding prompts within image files to bypass the usual safety guardrails.

According to Enkrypt, “these two models are 60 times more prone to generate child sexual exploitation material (CSEM) than comparable models like OpenAI’s GPT-4o and Anthropic’s Claude 3.7 Sonnet.

“Additionally, the models were 18-40 times more likely to produce dangerous CBRN (Chemical, Biological, Radiological, and Nuclear) information when prompted with adversarial inputs.”

“The ability to embed harmful instructions within seemingly innocuous images has real implications for public safety, child protection, and national security,” said Sahil Agarwal, CEO of Enkrypt AI.

“These are not theoretical risks. If we don’t take a security-first approach to multimodal AI, we risk exposing users—and especially vulnerable populations—to significant harm.”

AI companies privately say we’re hurtling toward doom

Billionaire hedge fund manager Paul Tudor Jones recently attended a high-profile tech event for 40 world leaders and reported that “four of the leading modelers of the AI models that we’re all using today” hold grave concerns about the existential risk from AI.

He said that all four believe there’s at least a 10% chance that AI will kill 50% of humanity in the next 20 years.

The good news is they all believe there will be massive improvements in health and education from AI coming even sooner, but his key takeaway was “that AI clearly poses an imminent threat, security threat, imminent in our lifetimes to humanity.”

“They said the competitive dynamic is so intense among the companies and then geopolitically between Russia and China that there’s no agency, no ability to stop and say, maybe we should think about what actually we’re creating and building here.”

Fortunately one of the AI scientists has a practical solution.

“He said, well, I’m buying 100 acres in the Midwest. I’m getting cattle and chickens and I’m laying in provisions for real, for real, for real. And that was obviously a little disconcerting. And then he went on to say, ‘I think it’s going to take an accident where 50 to 100 million people die to make the world take the threat of this really seriously.’”

The CNBC host looked slightly stunned and said: “Thank you for bringing us this great news over breakfast.”

At an exclusive event of world leaders, Paul Tudor Jones says a top AI leader warned everyone:

“It’s going to take an accident where 50 to 100 million people die to make the world take the threat of this really seriously…

I’m buying 100 acres in the Midwest, cattle, chickens.”… https://t.co/IunntmaqzI pic.twitter.com/xotmg5nvex

— AI Notkilleveryoneism Memes ⏸️ (@AISafetyMemes) May 6, 2025

Dead man’s victim impact statement

An army veteran who was shot dead four years ago has delivered a victim impact statement to an Arizona court via a deepfake video. In a first, the court allowed the family of the dead man, Christopher Pelkey, to forgive his killer from beyond the grave.

“To Gabriel Horcasitas, the man who shot me, it is a shame we encountered each other that day in those circumstances,” the AI-generated Pelkey said.

“I believe in forgiveness, and a God who forgives. I always have, and I still do,” he added.

It’s probably less troubling than it seems at first glance because Pelkey’s sister Stacey wrote the script, and the video was generated from real video of Pelkey.

“I said, ‘I have to let him speak,’ and I wrote what he would have said, and I said, ‘That’s pretty good, I’d like to hear that if I was the judge,’” Stacey said.

Interestingly, Stacey hasn’t forgiven Horcasitas, but said she knew her brother would have.

A judge sentenced Horcasitas, 50, to 10 and a half years in prison last week, noting the forgiveness expressed in the AI statement.

Largely Lying Machines: Reasoning models hallucinate the most

Over the past 18 months, hallucination rates for LLMs asked to summarize a news article have fallen from a range of 3% to 27% down to a range of 1% to 2%. (Hallucinate is a technical term that means the model makes shit up.)

But new “reasoning” models that purportedly think through complex problems before giving an answer hallucinate at much higher rates.

OpenAI’s most powerful “state of the art” reasoning system o3 hallucinates one third of the time on a test answering questions about public figures, which is twice the rate of the previous reasoning system o1. o4-mini makes stuff up about public figures almost half the time.

And on a general knowledge test called SimpleQA, o3 hallucinated 51% of the time while o4-mini hallucinated 79% of the time.

Independent research suggests hallucination rates are also rising for reasoning models from Google and DeepSeek.

There are a variety of theories about this. It’s possible that small errors are compounding during the multistage reasoning process. But the models often hallucinate the reasoning process as well, with research finding that in many cases, the steps displayed by the bot have nothing to do with how it arrived at the answer.

“What the system says it is thinking is not necessarily what it is thinking,” said Aryo Pradipta Gema, an AI researcher and a fellow at Anthropic.

It just underscores the point that LLMs are one of the weirdest technologies ever. They generate output using mathematical probabilities around language, but nobody really understands precisely how. Anthropic CEO Dario Amodei admitted this week that “this lack of understanding is essentially unprecedented in the history of technology.”

All Killer No Filler AI News

— Netflix has released a beta version of its AI-upgraded search functionality on iOS that allows users to find titles based on vague requests for “a scary movie – but not too scary” or “funny and upbeat.”

— Social media will soon be drowning under the weight of deepfake AI video influencers generated by content farms. Here’s the lowdown on how they do it.

prompt: ai actor laughs pic.twitter.com/Kln221XCSI

— Romain Torres (@rom1trs) April 17, 2025

— OpenAI will remain controlled by its non-profit arm rather than transform into a for-profit startup as CEO Sam Altman wanted.

— Longevity-obsessed Bryan Johnson is starting a new religion and says that after superintelligence arrives, “existence itself will become the supreme virtue,” surpassing “wealth, power, status, and prestige as the foundational value for law, order, and societal structure.”

— Strategy boss Michael Saylor has given his thoughts on AI. And they’re pretty much the same thoughts he has about everything. “The AIs are gonna wanna buy the Bitcoin.”

Andrew Fenton

Based in Melbourne, Andrew Fenton is a journalist and editor covering cryptocurrency and blockchain. He has worked as a national entertainment writer for News Corp Australia, on SA Weekend as a film journalist, and at The Melbourne Weekly.